Compare commits

4 Commits

9868c823a6

...

51f55e8d07

| Author | SHA1 | Date | |
|---|---|---|---|
| | 51f55e8d07 | | |
| | bbe52da9bb | | |
| | aa1527ccb5 | | |
| | e5109b1f9b | | |

22

README.md


@ -3,13 +3,17 @@ A monorepo for all my projects and dotfiles. Hosted on [my Gitea](https://gitea.

|

|||||||

|

|

||||||

## Map of Contents

|

## Map of Contents

|

||||||

|

|

||||||

| Project | Summary | Path |

|

| Project | Summary |

|

||||||

|:-------------------:|:-------:|:----:|

|

|:----------------------:|:-------:|

|

||||||

| homelab | Configuration and documentation for my homelab. | [`homelab/`](homelab/) |

|

| [dotfiles](/dotfiles/) | Configuration and documentation for my PCs. |

|

||||||

| nix | Nix flake defining my PC & k3s cluster configurations | [`nix`](nix/) |

|

| [homelab](/homelab/) | Configuration and documentation for my homelab. |

|

||||||

| Jafner.dev | Hugo static site configuration files for my [Jafner.dev](https://jafner.dev) blog. | [`blog/`](blog/) |

|

| [projects](/projects/) | Self-contained projects in a variety of scripting and programming languages. |

|

||||||

| razer-bat | Indicate Razer mouse battery level with the RGB LEDs on the dock. Less metal than it sounds. | [`projects/razer-bat/`](projects/razer-bat/) |

|

| [sites](/sites/) | Static site files |

|

||||||

| 5etools-docker | Docker image to make self-hosting 5eTools a little bit better. | [`projects/5etools-docker/`](projects/5etools-docker/) |

|

| [.gitea/workflows](/.gitea/workflows/) & [.github/workflows](/.github/workflows/) | GitHub Actions workflows running on [Gitea](https://gitea.jafner.tools/Jafner/Jafner.net/actions) and [GitHub](https://github.com/Jafner/Jafner.net/actions), respectively. |

|

||||||

| 5eHomebrew | 5eTools-compatible homebrew content by me. | [`projects/5ehomebrew/`](projects/5ehomebrew/) |

|

| [.sops](/.sops/) | Scripts and documentation implementing [sops](https://github.com/getsops/sops) to securely store secrets in this repo. |

|

||||||

| archive | Old, abandoned, unmaintained projects. Fun to look back at. | [`archive/`](archive/) |

|

|

||||||

|

|

||||||

|

## LICENSE: MIT License

|

||||||

|

> See [LICENSE](/LICENSE) for details.

|

||||||

|

|

||||||

|

## Contributing

|

||||||

|

Presently this project is a one-man operation with no external contributors. All contributions will be addressed in good faith on a best-effort basis.

|

||||||

@ -1,2 +0,0 @@

|

|||||||

DOMAIN=

|

|

||||||

EMAIL=

|

|

||||||

@ -1,77 +0,0 @@

|

|||||||

# Project No Longer Maintained

|

|

||||||

> There are better ways to do this.

|

|

||||||

> I recommend looking for general-purpose guides to self-hosting with Docker and Traefik. For 5eTools specifically, I do maintain [5etools-docker](https://github.com/Jafner/5etools-docker).

|

|

||||||

|

|

||||||

This guide will walk you through setting up an Oracle Cloud VM to host a personal instance of 5eTools using your own domain.

|

|

||||||

|

|

||||||

# Before Getting Started

|

|

||||||

|

|

||||||

1. You will need a domain! I used NameCheap to purchase my `.tools` domain for $7 per year.

|

|

||||||

2. You will need an [Oracle Cloud](https://www.oracle.com/cloud/) account - This will be used to create an Always Free cloud virtual machine, which will host the services we need. You will need to attach a credit card to your account. I used a [Privacy.com](https://privacy.com/) temporary card to ensure I wouldn't be charged accidentally at the end of the 30-day trial. The services used in this guide are under Oracle's Always Free category, so unless you exceed the 10 TB monthly traffic allotment, you won't be charged.

|

|

||||||

3. You will need a [Cloudflare](https://www.cloudflare.com/) account - This will be used to manage the domain name after purchase. You will need to migrate your domain from the registrar you bought the domain from to Cloudflare.

|

|

||||||

4. You will need an SSH client (I use Tabby, formerly Terminus). This will be used to log into and manage the Oracle Cloud virtual machine.

|

|

||||||

|

|

||||||

# Walkthrough

|

|

||||||

|

|

||||||

## Purchase a domain name from a domain registrar.

|

|

||||||

I used NameCheap, which offered my `.tools` domain for $7 per year. Some top-level domains (TLDs) can be purchased for as little as $2-3 per year (such as `.xyz`, `.one`, or `.website`). Warning: these are usually 1-year special prices, and the price will increase significantly after the first year.

|

|

||||||

|

|

||||||

## Migrate your domain to Cloudflare.

|

|

||||||

The Cloudflare docs have a [domain transfer guide](https://developers.cloudflare.com/registrar/domain-transfers/transfer-to-cloudflare), which addresses how to do this. This process may take up to 24 hours. Cloudflare won't like that you are importing the domain without any DNS records, but that's okay.

|

|

||||||

|

|

||||||

## Create your Oracle Cloud virtual machine.

|

|

||||||

If you've already created your Oracle Cloud account, go to the [Oracle Cloud portal](https://cloud.oracle.com). Then under the "Launch Resources" section, click "Create a VM instance". Most of the default settings are fine. Click "Change Image" and uncheck Oracle Linux, then check Canonical Ubuntu, then click "Select Image". Under "Add SSH keys", download the private key for the instance by clicking the "Save Private Key" button. Finally, click "Create". You will need to wait a while for the instance to come online.

|

|

||||||

|

|

||||||

## Move your SSH key.

|

|

||||||

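Move your downloaded SSH key to your `.ssh` folder with `mkdir -p ~/.ssh/` and then `mv ~/Downloads/ssh-key-*.key ~/.ssh/`. SSH refuses to use a private key that other users can read, so restrict its permissions with `chmod 600 ~/.ssh/ssh-key-*.key`. If you already have a process for SSH key management, feel free to ignore this.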

|

|

||||||

|

|

||||||

## SSH into your VM.

|

|

||||||

Once your Oracle Cloud VM is provisioned (created), SSH into it. Get its public IP address from the "Instance Access" section of the instance's details page, then run `ssh -i ~/.ssh/ssh-key-<YOUR-KEY>.key ubuntu@<YOUR-INSTANCE-IP>`, replacing `<YOUR-KEY>` and `<YOUR-INSTANCE-IP>` with your key name and instance IP. (Tip: press Tab to auto-complete the filename of the key.)

|

|

||||||

|

|

||||||

## Set up the VM with all the software we need.

|

|

||||||

Now that we're in the terminal, you can just copy-paste commands to run. You can either run the following command (which is just a bunch of commands strung together), or run each command one at a time by following the lettered instructions below:

|

|

||||||

|

|

||||||

`sudo apt-get update && sudo apt-get upgrade -y && sudo apt-get -y install git docker docker-compose && sudo systemctl enable docker && sudo usermod -aG docker $USER && logout` OR:

|

|

||||||

|

|

||||||

### Update the system.

|

|

||||||

with `sudo apt-get update && sudo apt-get upgrade -y`.

|

|

||||||

|

|

||||||

### Install Git, Docker, and Docker Compose.

|

|

||||||

with `sudo apt-get -y install git docker docker-compose`.

|

|

||||||

|

|

||||||

### Enable the docker service.

|

|

||||||

with `sudo systemctl enable docker`.

|

|

||||||

|

|

||||||

### Add your user to the docker group.

|

|

||||||

with `sudo usermod -aG docker $USER`.

|

|

||||||

|

|

||||||

### Log out.

|

|

||||||

with `logout`.

|

|

||||||

|

|

||||||

## Configure the VM firewall.

|

|

||||||

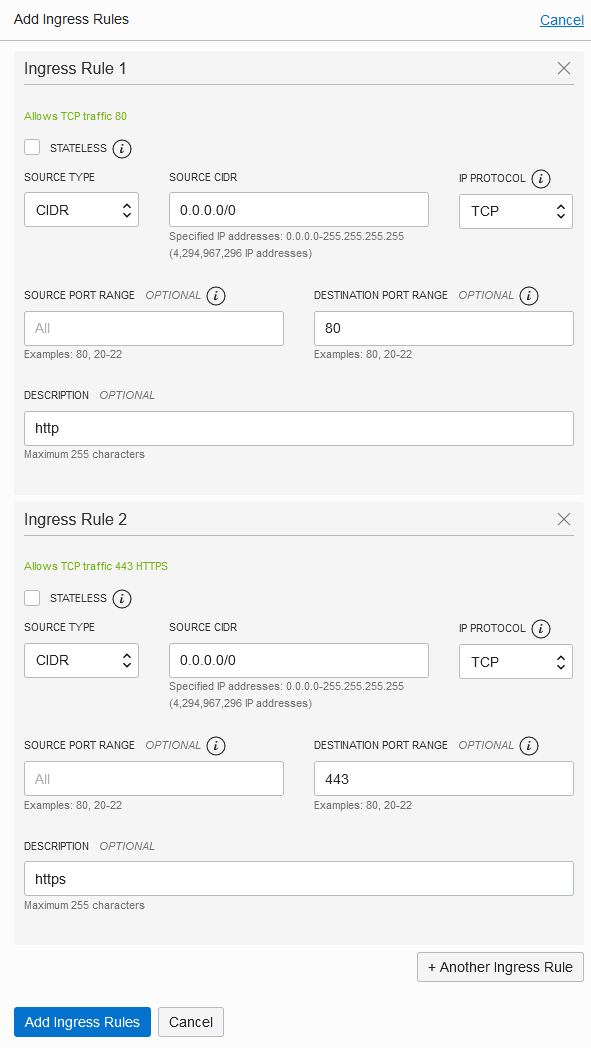

On the "Compute -> Instances -> Instance Details" page, under "Instance Information -> Primary VNIC -> Subnet", click the link to the subnet's configuration page, then click on the default security list. Click "Add Ingress Rules", then "+ Another Ingress Rule" and fill out your ingress rules like this:

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

This will allow incoming traffic from the internet on ports 80 and 443 (the ports used by HTTP and HTTPS respectively).

|

|

||||||

|

|

||||||

## Configure the Cloudflare DNS records.

|

|

||||||

After your domain has been transferred to Cloudflare, log into the [Cloudflare dashboard](https://dash.cloudflare.com) and click on your domain. Then click on the DNS button at the top, and click "Add record" with the following information:

|

|

||||||

|

|

||||||

* Type: A

|

|

||||||

* Name: 5e

|

|

||||||

* IPv4 Address: <YOUR-INSTANCE-IP>

|

|

||||||

* TTL: Auto

|

|

||||||

* Proxy status: DNS only

|

|

||||||

|

|

||||||

This will route `5e.your.domain` to <YOUR-INSTANCE-IP>. You can change the name to whatever you prefer, or use @ to use the root domain (just `your.domain`) instead. I found that using Cloudflare's proxy interferes with acquiring certificates.
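Before requesting certificates, it can help to confirm that the new record actually resolves to the instance. A minimal sketch using only the Python standard library; `resolves_to` and its `resolver` parameter are hypothetical names chosen for illustration, and it only checks IPv4 A records:

```python
import socket
from typing import Callable


def resolves_to(hostname: str, expected_ip: str,
                resolver: Callable[[str], str] = socket.gethostbyname) -> bool:
    """Return True if hostname resolves to expected_ip (IPv4 only)."""
    try:
        return resolver(hostname) == expected_ip
    except OSError:
        # socket.gaierror (name not found) is a subclass of OSError.
        return False
```

Calling `resolves_to("5e.your.domain", "<YOUR-INSTANCE-IP>")` with your own values should return `True` once the record has propagated. With the Cloudflare proxy enabled, the name would resolve to a Cloudflare address instead, so this check only makes sense with "DNS only".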

|

|

||||||

|

|

||||||

## Log back into your VM and set up the services.

|

|

||||||

Clone this repository onto the host with `git clone https://github.com/jafner/cloud_tools.git`, then move into the directory with `cd cloud_tools/`. Edit the file `.env` with your domain (including subdomain) and email. For example:

|

|

||||||

|

|

||||||

```

|

|

||||||

DOMAIN=5e.your.domain

|

|

||||||

EMAIL=youremail@gmail.com

|

|

||||||

```

|

|

||||||

|

|

||||||

Make the setup script executable, then run it with `chmod +x setup.sh && ./setup.sh`.
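The `.env` file is a list of plain `KEY=value` lines. As an illustration of the format, here is a sketch that parses it and reports which required values are still empty before the setup script is run. `load_env` and `missing_keys` are hypothetical helpers, not part of this repository:

```python
def load_env(text: str) -> dict[str, str]:
    """Parse KEY=value lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def missing_keys(values: dict[str, str],
                 required: tuple[str, ...] = ("DOMAIN", "EMAIL")) -> list[str]:
    """Return the required keys that are absent or empty."""
    return [key for key in required if not values.get(key)]
```

For example, `missing_keys(load_env(open(".env").read()))` returns an empty list once both `DOMAIN` and `EMAIL` are filled in.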

|

|

||||||

@ -1,29 +0,0 @@

|

|||||||

version: "3"

|

|

||||||

services:

|

|

||||||

traefik:

|

|

||||||

container_name: traefik

|

|

||||||

image: traefik:latest

|

|

||||||

networks:

|

|

||||||

- web

|

|

||||||

ports:

|

|

||||||

- 80:80

|

|

||||||

- 443:443

|

|

||||||

volumes:

|

|

||||||

- /var/run/docker.sock:/var/run/docker.sock:ro

|

|

||||||

- ./traefik.toml:/traefik.toml

|

|

||||||

- ./acme.json:/acme.json

|

|

||||||

|

|

||||||

5etools:

|

|

||||||

container_name: 5etools

|

|

||||||

image: jafner/5etools-docker

|

|

||||||

volumes:

|

|

||||||

- ./htdocs:/usr/local/apache2/htdocs

|

|

||||||

networks:

|

|

||||||

- web

|

|

||||||

labels:

|

|

||||||

- traefik.http.routers.5etools.rule=Host(`$DOMAIN`)

|

|

||||||

- traefik.http.routers.5etools.tls.certresolver=lets-encrypt

|

|

||||||

|

|

||||||

networks:

|

|

||||||

web:

|

|

||||||

external: true

|

|

||||||

Binary file not shown. Before: 31 KiB.

@ -1,7 +0,0 @@

|

|||||||

#!/bin/bash

|

|

||||||

docker network create web

|

|

||||||

sed -i "s/email = \"\"/email = \"$EMAIL\"/g" traefik.toml

|

|

||||||

mkdir -p ./htdocs/download

|

|

||||||

touch acme.json

|

|

||||||

chmod 600 acme.json

|

|

||||||

docker-compose up -d

|

|

||||||

@ -1,18 +0,0 @@

|

|||||||

[entryPoints]

|

|

||||||

[entryPoints.web]

|

|

||||||

address = ":80"

|

|

||||||

[entryPoints.web.http.redirections.entryPoint]

|

|

||||||

to = "websecure"

|

|

||||||

scheme = "https"

|

|

||||||

[entryPoints.websecure]

|

|

||||||

address = ":443"

|

|

||||||

|

|

||||||

[certificatesResolvers.lets-encrypt.acme]

|

|

||||||

email = ""

|

|

||||||

storage = "acme.json"

|

|

||||||

caServer = "https://acme-v02.api.letsencrypt.org/directory"

|

|

||||||

[certificatesResolvers.lets-encrypt.acme.tlsChallenge]

|

|

||||||

|

|

||||||

[providers.docker]

|

|

||||||

watch = true

|

|

||||||

network = "web"

|

|

||||||

@ -1,19 +0,0 @@

|

|||||||

# Assign test file names/paths

|

|

||||||

SOURCE_FILE=$(realpath ~/Git/Clip/TestClips/"x264Source.mkv") && echo "SOURCE_FILE: $SOURCE_FILE"

|

|

||||||

TRANSCODED_FILE="$(realpath ~/Git/Clip/TestClips)/TRANSCODED.mp4" && echo "TRANSCODED_FILE: $TRANSCODED_FILE"

|

|

||||||

|

|

||||||

# TRANSCODE $SOURCE_FILE into 'TRANSCODED.mp4'

|

|

||||||

ffmpeg -hide_banner -i "$SOURCE_FILE" -copyts -copytb 0 -map 0 -bf 0 -c:v libx264 -crf 23 -preset slow -video_track_timescale 1000 -g 60 -keyint_min 60 -bsf:v setts=ts=STARTPTS+N/TB_OUT/60 -c:a copy "$TRANSCODED_FILE"

|

|

||||||

|

|

||||||

# GET KEYFRAMES FROM $SOURCE_FILE

|

|

||||||

KEYFRAMES=( $(ffprobe -hide_banner -loglevel error -select_streams v:0 -show_entries packet=pts,flags -of csv=print_section=0 "$SOURCE_FILE" | grep K | cut -d',' -f 1) ) && echo "${KEYFRAMES[@]}"

|

|

||||||

ffprobe -hide_banner -loglevel error -select_streams v:0 -show_entries packet=pts,flags -of csv=print_section=0 "$SOURCE_FILE"

|

|

||||||

|

|

||||||

# GET KEYFRAMES FROM $TRANSCODED_FILE

|

|

||||||

KEYFRAMES=( $(ffprobe -hide_banner -loglevel error -select_streams v:0 -show_entries packet=pts,flags -of csv=print_section=0 "$TRANSCODED_FILE" | grep K | cut -d',' -f 1) ) && echo "${KEYFRAMES[@]}"

|

|

||||||

ffprobe -hide_banner -loglevel error -select_streams v:0 -show_entries packet=pts,flags -of csv=print_section=0 "$TRANSCODED_FILE"

|

|

||||||

|

|

||||||

# Compare keyframes between $SOURCE_FILE and $TRANSCODED_FILE

|

|

||||||

sdiff <(ffprobe -hide_banner -loglevel error -select_streams v:0 -show_entries packet=pts,flags -of csv=print_section=0 "$SOURCE_FILE") <(ffprobe -hide_banner -loglevel error -select_streams v:0 -show_entries packet=pts,flags -of csv=print_section=0 "$TRANSCODED_FILE")

|

|

||||||

|

|

||||||

https://code.videolan.org/videolan/x264/-/blob/master/common/base.c#L489

|

|

||||||

@ -1,7 +0,0 @@

|

|||||||

{

|

|

||||||

"folders": [

|

|

||||||

{

|

|

||||||

"path": "."

|

|

||||||

}

|

|

||||||

]

|

|

||||||

}

|

|

||||||

@ -1,262 +0,0 @@

|

|||||||

import tkinter as tk # https://docs.python.org/3/library/tkinter.html

|

|

||||||

from tkinter import filedialog, ttk

|

|

||||||

import av

|

|

||||||

import subprocess

|

|

||||||

from pathlib import Path

|

|

||||||

import time

|

|

||||||

import datetime

|

|

||||||

from PIL import Image, ImageTk, ImageOps

|

|

||||||

from RangeSlider.RangeSlider import RangeSliderH

|

|

||||||

from ffpyplayer.player import MediaPlayer

|

|

||||||

from probe import get_keyframes_list, get_keyframe_interval, get_video_duration

|

|

||||||

|

|

||||||

class VideoClipExtractor:

|

|

||||||

def __init__(self, master):

|

|

||||||

# Initialize variables

|

|

||||||

self.video_duration = int() # milliseconds

|

|

||||||

self.video_path = Path() # Path object

|

|

||||||

self.video_keyframes = list() # list of ints (keyframe pts in milliseconds)

|

|

||||||

self.clip_start = tk.IntVar(value = 0) # milliseconds

|

|

||||||

self.clip_end = tk.IntVar(value = 1) # milliseconds

|

|

||||||

|

|

||||||

self.preview_image_timestamp = -1 # milliseconds as a plain int; -1 until the first thumbnail is rendered

|

|

||||||

|

|

||||||

self.debug_checkvar = tk.IntVar() # Checkbox variable

|

|

||||||

|

|

||||||

self.background_color = "#BBBBBB"

|

|

||||||

self.text_color = "#000000"

|

|

||||||

self.preview_background_color = "#2222FF"

|

|

||||||

|

|

||||||

# Set up master UI

|

|

||||||

self.master = master

|

|

||||||

self.master.title("Video Clip Extractor")

|

|

||||||

self.master.configure(background=self.background_color)

|

|

||||||

self.master.resizable(False, False)

|

|

||||||

self.master.geometry("")

|

|

||||||

self.window_max_width = self.master.winfo_screenwidth()*0.75

|

|

||||||

self.window_max_height = self.master.winfo_screenheight()*0.75

|

|

||||||

self.preview_width = 1280

|

|

||||||

self.preview_height = 720

|

|

||||||

self.preview_image = Image.new("RGB", (self.preview_width, self.preview_height), color=self.background_color)

|

|

||||||

self.preview_image_tk = ImageTk.PhotoImage(self.preview_image)

|

|

||||||

|

|

||||||

self.timeline_width = self.preview_width

|

|

||||||

self.timeline_height = 64

|

|

||||||

|

|

||||||

self.interface_width = self.preview_width

|

|

||||||

self.interface_height = 200

|

|

||||||

|

|

||||||

# Initialize frames, buttons and labels

|

|

||||||

self.preview_frame = tk.Frame(self.master, width=self.preview_width, height=self.preview_height, bg=self.preview_background_color, borderwidth=0, bd=0)

|

|

||||||

self.timeline_frame = tk.Frame(self.master, width=self.timeline_width, height=self.timeline_height, bg=self.background_color)

|

|

||||||

self.interface_pane = tk.Frame(self.master, width=self.interface_width, height=self.interface_height, bg=self.background_color)

|

|

||||||

self.buttons_pane = tk.Frame(self.interface_pane, bg=self.background_color)

|

|

||||||

self.info_pane = tk.Frame(self.interface_pane, bg=self.background_color)

|

|

||||||

|

|

||||||

self.preview_canvas = tk.Canvas(self.preview_frame, width=self.preview_width, height=self.preview_height, bg=self.preview_background_color, borderwidth=0, bd=0)

|

|

||||||

self.browse_button = tk.Button(self.buttons_pane, text="Browse...", command=self.browse_video_file, background=self.background_color, foreground=self.text_color)

|

|

||||||

self.extract_button = tk.Button(self.buttons_pane, text="Extract Clip", command=self.extract_clip, background=self.background_color, foreground=self.text_color)

|

|

||||||

self.debug_checkbutton = tk.Checkbutton(self.buttons_pane, text="Print ffmpeg to console", variable=self.debug_checkvar, background=self.background_color, foreground=self.text_color)

|

|

||||||

self.preview_button = tk.Button(self.buttons_pane, text="Preview Clip", command=self.ffplaySegment, background=self.background_color, foreground=self.text_color)

|

|

||||||

self.video_path_label = tk.Label(self.info_pane, text=f"Source video: {self.video_path}", background=self.background_color, foreground=self.text_color)

|

|

||||||

self.clip_start_label = tk.Label(self.timeline_frame, text=f"{self.timeStr(self.clip_start.get())}", background=self.background_color, foreground=self.text_color)

|

|

||||||

self.clip_end_label = tk.Label(self.timeline_frame, text=f"{self.timeStr(self.clip_end.get())}", background=self.background_color, foreground=self.text_color)

|

|

||||||

self.video_duration_label = tk.Label(self.info_pane, text=f"Video duration: {self.timeStr(self.video_duration)}", background=self.background_color, foreground=self.text_color)

|

|

||||||

self.timeline_canvas = tk.Canvas(self.timeline_frame, width=self.preview_width, height=self.timeline_height, background=self.background_color)

|

|

||||||

self.timeline = RangeSliderH(

|

|

||||||

self.timeline_canvas,

|

|

||||||

[self.clip_start, self.clip_end],

|

|

||||||

max_val=max(self.video_duration,1),

|

|

||||||

show_value=False,

|

|

||||||

bgColor=self.background_color,

|

|

||||||

Width=self.timeline_width,

|

|

||||||

Height=self.timeline_height

|

|

||||||

)

|

|

||||||

self.preview_label = tk.Label(self.preview_frame, image=self.preview_image_tk)

|

|

||||||

|

|

||||||

print(f"Widget widths (before pack):\n\

|

|

||||||

self.clip_start_label.winfo_width(): {self.clip_start_label.winfo_width()}\n\

|

|

||||||

self.clip_end_label.winfo_width(): {self.clip_end_label.winfo_width()}\n\

|

|

||||||

self.timeline.winfo_width(): {self.timeline.winfo_width()}\n\

|

|

||||||

")

|

|

||||||

|

|

||||||

# Arrange frames inside master window

|

|

||||||

self.preview_frame.pack(side='top', fill='both', expand=True, padx=0, pady=0)

|

|

||||||

self.timeline_frame.pack(fill='x', expand=True, padx=20, pady=20)

|

|

||||||

self.interface_pane.pack(side='bottom', fill='both', expand=True, padx=10, pady=10)

|

|

||||||

self.buttons_pane.pack(side='left')

|

|

||||||

self.info_pane.pack(side='right')

|

|

||||||

|

|

||||||

# Draw elements inside frames

|

|

||||||

self.browse_button.pack(side='top')

|

|

||||||

self.extract_button.pack(side='top')

|

|

||||||

self.preview_button.pack(side='top')

|

|

||||||

self.debug_checkbutton.pack(side='top')

|

|

||||||

self.video_path_label.pack(side='top')

|

|

||||||

self.clip_start_label.pack(side='left')

|

|

||||||

self.clip_end_label.pack(side='right')

|

|

||||||

self.video_duration_label.pack(side='top')

|

|

||||||

self.preview_label.pack(fill='both', expand=True)

|

|

||||||

|

|

||||||

# Draw timeline canvas and timeline slider

|

|

||||||

self.timeline_canvas.pack(fill="both", expand=True)

|

|

||||||

self.timeline.pack(fill="both", expand=True)

|

|

||||||

|

|

||||||

print(f"Widget widths (after pack):\n\

|

|

||||||

self.clip_start_label.winfo_width(): {self.clip_start_label.winfo_width()}\n\

|

|

||||||

self.clip_end_label.winfo_width(): {self.clip_end_label.winfo_width()}\n\

|

|

||||||

self.timeline.winfo_width(): {self.timeline.winfo_width()}\n\

|

|

||||||

")

|

|

||||||

|

|

||||||

def getThumbnail(self):

|

|

||||||

with av.open(str(self.video_path)) as container:

|

|

||||||

time_ms = self.clip_start.get() # This works as long as container has a timebase of 1/1000

|

|

||||||

container.seek(time_ms, stream=container.streams.video[0])

|

|

||||||

time.sleep(0.1)

|

|

||||||

frame = next(container.decode(video=0)) # Get the frame object for the seeked timestamp

|

|

||||||

if self.preview_image_timestamp != time_ms:

|

|

||||||

self.preview_image_tk = ImageTk.PhotoImage(frame.to_image(width=self.preview_width, height=self.preview_height)) # Convert the frame object to an image

|

|

||||||

self.preview_label.config(image=self.preview_image_tk)

|

|

||||||

self.preview_image_timestamp = time_ms

|

|

||||||

|

|

||||||

def ffplaySegment(self):

|

|

||||||

ffplay_command = [

|

|

||||||

"ffplay",

|

|

||||||

"-hide_banner",

|

|

||||||

"-autoexit",

|

|

||||||

"-volume", "10",

|

|

||||||

"-window_title", f"{self.timeStr(self.clip_start.get())} to {self.timeStr(self.clip_end.get())}",

|

|

||||||

"-x", "1280",

|

|

||||||

"-y", "720",

|

|

||||||

"-ss", f"{self.clip_start.get()}ms",

|

|

||||||

"-i", str(self.video_path),

|

|

||||||

"-t", f"{self.clip_end.get() - self.clip_start.get()}ms"

|

|

||||||

]

|

|

||||||

print("Playing video. Press \"q\" or \"Esc\" to exit.")

|

|

||||||

print("")

|

|

||||||

subprocess.run(ffplay_command, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)

|

|

||||||

|

|

||||||

|

|

||||||

def redrawTimeline(self):

|

|

||||||

self.timeline.forget()

|

|

||||||

step_size = get_keyframe_interval(self.video_keyframes)

|

|

||||||

step_marker = False

|

|

||||||

if len(self.video_keyframes) < self.timeline_width/4 and step_size > 0:

|

|

||||||

step_marker = True

|

|

||||||

self.timeline = RangeSliderH(

|

|

||||||

self.timeline_canvas,

|

|

||||||

[self.clip_start, self.clip_end],

|

|

||||||

max_val=max(self.video_duration,1),

|

|

||||||

step_marker=step_marker,

|

|

||||||

step_size=step_size,

|

|

||||||

show_value=False,

|

|

||||||

bgColor=self.background_color,

|

|

||||||

Width=self.timeline_width,

|

|

||||||

Height=self.timeline_height

|

|

||||||

)

|

|

||||||

self.timeline.pack()

|

|

||||||

#self.preview_canvas.create_text(self.preview_canvas.winfo_width() // 2, self.preview_canvas.winfo_height() // 2, text=f"Loading video...", fill="black", font=("Helvetica", 48))

|

|

||||||

|

|

||||||

|

|

||||||

def timeStr(self, milliseconds: int): # Takes milliseconds int or float and returns a string in the preferred format

|

|

||||||

h = int(milliseconds/3600000) # Get the hours component

|

|

||||||

m = int((milliseconds%3600000)/60000) # Get the minutes component

|

|

||||||

s = int((milliseconds%60000)/1000) # Get the seconds component

|

|

||||||

ms = int(milliseconds%1000) # Get the milliseconds component

|

|

||||||

if milliseconds < 60000:

|

|

||||||

return f"{s}.{ms:03}"

|

|

||||||

elif milliseconds < 3600000:

|

|

||||||

return f"{m}:{s:02}.{ms:03}"

|

|

||||||

else:

|

|

||||||

return f"{h}:{m:02}:{s:02}.{ms:03}"

|

|

||||||

|

|

||||||

def clip_selector(self):

|

|

||||||

def updateClipRange(var, index, mode):

|

|

||||||

clip_end = self.clip_end.get()

|

|

||||||

nearest_keyframe_start = self.nearest_keyframe(self.clip_start.get(), self.video_keyframes)

|

|

||||||

# Add a specific check to make sure that the clip end is not changing to be equal to or less than the clip start

|

|

||||||

if clip_end <= nearest_keyframe_start:

|

|

||||||

clip_end = nearest_keyframe_start + self.timeline.step_size

|

|

||||||

self.clip_start_label.config(text=f"{self.timeStr(nearest_keyframe_start)}")

|

|

||||||

self.clip_end_label.config(text=f"{self.timeStr(clip_end)}")

|

|

||||||

self.timeline.forceValues([nearest_keyframe_start, clip_end])

|

|

||||||

self.getThumbnail()

|

|

||||||

if self.video_path == Path(): # No video selected yet (an empty Path is ".")

|

|

||||||

return False

|

|

||||||

self.clip_start.trace_add("write", callback=updateClipRange) # This actually triggers on both start and end

|

|

||||||

|

|

||||||

def nearest_keyframe(self, test_pts: int, valid_pts: list):

|

|

||||||

return(min(valid_pts, key=lambda x:abs(x-float(test_pts))))

|

|

||||||

|

|

||||||

def browse_video_file(self):

|

|

||||||

video_path = filedialog.askopenfilename(

|

|

||||||

initialdir="~/Git/Clip/TestClips/",

|

|

||||||

title="Select file",

|

|

||||||

filetypes=(("mp4/mkv files", '*.mp4 *.mkv'), ("all files", "*.*"))

|

|

||||||

)

|

|

||||||

print(f"video path: \"{video_path}\" (type: {type(video_path)})")

|

|

||||||

if not Path(str(video_path)).is_file():

|

|

||||||

return

|

|

||||||

video_keyframes = get_keyframes_list(video_path)

|

|

||||||

if video_keyframes is None:

print(f"No keyframes found in {video_path}. Choose a different video file.")

return self.browse_video_file() # Re-prompt and re-probe the newly selected file

|

|

||||||

# Once we have a video file, we need to set the Source video, Clip start, Clip end, and Video duration values and redraw the GUI.

|

|

||||||

self.video_path = Path(video_path)

|

|

||||||

self.video_duration = get_video_duration(video_path)

|

|

||||||

self.video_keyframes = video_keyframes

|

|

||||||

self.clip_start.set(min(self.video_keyframes))

|

|

||||||

self.clip_end.set(max(self.video_keyframes))

|

|

||||||

self.clip_start_label.config(text=f"{self.timeStr(self.nearest_keyframe(self.clip_start.get(), self.video_keyframes))}")

|

|

||||||

self.clip_end_label.config(text=f"{self.timeStr(self.clip_end.get())}")

|

|

||||||

|

|

||||||

self.getThumbnail()

|

|

||||||

|

|

||||||

self.video_path_label.config(text=f"Source video: {self.video_path}")

|

|

||||||

self.video_duration_label.config(text=f"Video duration: {self.timeStr(self.video_duration)}")

|

|

||||||

self.redrawTimeline()

|

|

||||||

self.clip_selector()

|

|

||||||

|

|

||||||

def extract_clip(self):

|

|

||||||

video_path = self.video_path

|

|

||||||

file_extension = video_path.suffix

|

|

||||||

clip_start = self.clip_start.get()

|

|

||||||

clip_end = self.clip_end.get()

|

|

||||||

|

|

||||||

output_path = Path(

|

|

||||||

filedialog.asksaveasfilename(

|

|

||||||

initialdir=video_path.parent,

|

|

||||||

initialfile=str(

|

|

||||||

f"[Clip] {video_path.stem} ({datetime.timedelta(milliseconds=clip_start)}-{datetime.timedelta(milliseconds=clip_end)}){file_extension}"),

|

|

||||||

title="Select output file",

|

|

||||||

defaultextension=file_extension

|

|

||||||

)

|

|

||||||

)

|

|

||||||

if output_path == Path("."):

|

|

||||||

return False

|

|

||||||

ffmpeg_command = [

|

|

||||||

"ffmpeg",

|

|

||||||

"-y", # The output path prompt asks for confirmation before overwriting

|

|

||||||

"-hide_banner",

|

|

||||||

"-i", str(video_path),

|

|

||||||

"-ss", f"{clip_start}ms",

|

|

||||||

"-to", f"{clip_end}ms",

|

|

||||||

"-map", "0",

|

|

||||||

"-c:v", "copy",

|

|

||||||

"-c:a", "copy",

|

|

||||||

str(output_path),

|

|

||||||

]

|

|

||||||

if self.debug_checkvar.get() == 1:

|

|

||||||

subprocess.run(ffmpeg_command)

|

|

||||||

else:

|

|

||||||

subprocess.run(ffmpeg_command, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)

|

|

||||||

print(f"Finished! Saved to {output_path}")

|

|

||||||

|

|

||||||

root = tk.Tk()

|

|

||||||

app = VideoClipExtractor(root)

|

|

||||||

root.mainloop()

|

|

||||||

|

|

||||||
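The timestamp formatting and keyframe snapping in `VideoClipExtractor` can be exercised without the GUI. Below is a minimal standalone sketch of the same logic. `format_ms` and `snap_to_keyframe` are hypothetical free-function names, and the keyframe list is made-up sample data rather than output from a real file:

```python
def format_ms(milliseconds: int) -> str:
    """Format a millisecond count as s.mmm, m:ss.mmm, or h:mm:ss.mmm."""
    total_seconds, ms = divmod(int(milliseconds), 1000)
    total_minutes, s = divmod(total_seconds, 60)
    h, m = divmod(total_minutes, 60)
    if h:
        return f"{h}:{m:02}:{s:02}.{ms:03}"
    if m:
        return f"{m}:{s:02}.{ms:03}"
    return f"{s}.{ms:03}"


def snap_to_keyframe(pts_ms: int, keyframes_ms: list[int]) -> int:
    """Return the keyframe timestamp closest to pts_ms."""
    return min(keyframes_ms, key=lambda k: abs(k - pts_ms))


keyframes = [0, 1000, 2000, 3000]  # sample data: one keyframe per second
print(format_ms(snap_to_keyframe(1400, keyframes)))  # 1.000
print(format_ms(3_723_004))                          # 1:02:03.004
```

Snapping the clip start to a keyframe matters because the extraction uses stream copy (`-c:v copy`), which can only cut cleanly on keyframe boundaries.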

@ -1,35 +0,0 @@

|

|||||||

# Version using ffPyPlayer

|

|

||||||

from pathlib import Path

|

|

||||||

import tkinter as tk

|

|

||||||

import time

|

|

||||||

from ffpyplayer.player import MediaPlayer

|

|

||||||

|

|

||||||

def ffplaySegment(file: Path, start: int, end: int):

|

|

||||||

print(f"Playing {file} from {start}ms to {end}ms")

|

|

||||||

file = str(file) # Must be string

|

|

||||||

seek_to = float(start)/1000

|

|

||||||

play_for = float(end - start)/1000

|

|

||||||

x = int(1280)

|

|

||||||

y = int(720)

|

|

||||||

volume = float(0.2) # Float 0.0 to 1.0

|

|

||||||

# Must be dict

|

|

||||||

ff_opts = {

|

|

||||||

"paused": False, # Bool

|

|

||||||

"t": play_for, # Float seconds

|

|

||||||

"ss": seek_to, # Float seconds

|

|

||||||

"x": x,

|

|

||||||

"y": y,

|

|

||||||

"volume": volume

|

|

||||||

}

|

|

||||||

val = ''

|

|

||||||

player = MediaPlayer(file, ff_opts=ff_opts)

|

|

||||||

while val != 'eof':

|

|

||||||

frame, val = player.get_frame()

|

|

||||||

print(f"frame: (type: {type(frame)})", end=', ')

|

|

||||||

if val != 'eof' and frame is not None:

|

|

||||||

img, t = frame

|

|

||||||

print(f"img: (type: {type(img)})", end=', ')

|

|

||||||

print(f"t: (type: {type(t)})")

|

|

||||||

# Use the create_image method of the canvas widget to draw the image to the canvas.

|

|

||||||

|

|

||||||

|

|

||||||

@ -1,43 +0,0 @@

|

|||||||

import subprocess

|

|

||||||

from pathlib import Path

|

|

||||||

import numpy as np

|

|

||||||

import ffmpeg

|

|

||||||

|

|

||||||

# Get a list of keyframes by pts (milliseconds from start) from a video file.

|

|

||||||

def get_keyframes_list(video_path):

|

|

||||||

ffprobe_command = [

|

|

||||||

"ffprobe",

|

|

||||||

"-hide_banner",

|

|

||||||

"-loglevel", "error",

|

|

||||||

"-skip_frame", "nokey",

|

|

||||||

"-select_streams", "v:0",

|

|

||||||

"-show_entries", "packet=pts,flags",

|

|

||||||

"-of", "csv=print_section=0",

|

|

||||||

video_path

|

|

||||||

]

|

|

||||||

|

|

||||||

ffprobe_output = subprocess.run(ffprobe_command, capture_output=True, text=True)

|

|

||||||

keyframes = [int(line.split(",")[0]) for line in ffprobe_output.stdout.splitlines() if "K" in line] # pts of every packet flagged as a keyframe; empty list if none

|

|

||||||

if len(keyframes) <= 1:

|

|

||||||

# Fewer than two keyframes: there is no interval to snap to, so signal that to the caller.

|

|

||||||

return(None)

|

|

||||||

return(keyframes)

|

|

||||||

|

|

||||||

def get_keyframe_interval(keyframes_list: list): # Takes a list of ints representing keyframe pts (milliseconds from start) and returns the keyframe interval in milliseconds, or 0 if the keyframe intervals are not all the same.

|

|

||||||

intervals = list(np.diff(keyframes_list)) # List of keyframe intervals in milliseconds.

|

|

||||||

if np.all(intervals == intervals[0]):

|

|

||||||

return(intervals[0])

|

|

||||||

else:

|

|

||||||

return(0)

|

|

||||||

|

|

||||||

def get_keyframe_intervals(keyframes_list):

|

|

||||||

# Return a list of keyframe intervals in milliseconds.

|

|

||||||

return(list(np.diff(keyframes_list)))

|

|

||||||

|

|

||||||

def keyframe_intervals_are_clean(keyframe_intervals):

|

|

||||||

# Return whether the keyframe intervals are all the same.

|

|

||||||

return(np.all(keyframe_intervals == keyframe_intervals[0]))

|

|

||||||

|

|

||||||

# Get the duration of a video file in milliseconds (useful for ffmpeg pts).

|

|

||||||

def get_video_duration(video_path):

|

|

||||||

return int(float(ffmpeg.probe(video_path)["format"]["duration"])*1000)

|

|

||||||
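Reviewer note on the deleted keyframe helper: the one-line parse in `get_keyframes_list` is dense, so here is a more readable sketch of the same idea. It assumes, as the deleted code did, that each line of ffprobe's `csv=print_section=0` output carries `pts` first and `flags` second (for example `2000,K_`). The names `parse_keyframe_pts` and `uniform_interval` and the sample string are hypothetical, not part of the repository.

```python
def parse_keyframe_pts(ffprobe_csv: str) -> list[int]:
    """Collect the pts of every packet whose flags field contains 'K'."""
    keyframes = []
    for line in ffprobe_csv.splitlines():
        fields = line.strip().split(",")
        if len(fields) < 2:
            continue  # skip blank or malformed lines
        pts, flags = fields[0], fields[1]
        # Skip packets without a numeric pts (assumed to be reported as "N/A").
        if "K" in flags and pts.lstrip("-").isdigit():
            keyframes.append(int(pts))
    return keyframes


def uniform_interval(keyframes):
    """Return the shared keyframe interval, or None if intervals differ."""
    intervals = [b - a for a, b in zip(keyframes, keyframes[1:])]
    if intervals and all(i == intervals[0] for i in intervals):
        return intervals[0]
    return None


# Made-up sample output: three keyframes, two non-keyframes.
sample = "0,K_\n33,__\n67,__\n2000,K_\n4000,K_\n"
print(parse_keyframe_pts(sample))
print(uniform_interval(parse_keyframe_pts(sample)))
```

Unlike the deleted helper, this sketch returns `None` rather than `0` for irregular intervals, so that a real interval can never be mistaken for the failure value.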

@ -1,6 +0,0 @@

av==12.0.0
ffmpeg-python==0.2.0
ffpyplayer==4.5.1
numpy==1.26.4
pillow==10.3.0
RangeSlider==2023.7.2

@ -1,21 +0,0 @@

import av

with av.open("TestClips/x264Source.mkv") as container:
    frame_num = 622
    time_base = container.streams.video[0].time_base
    framerate = container.streams.video[0].average_rate
    timestamp = frame_num/framerate
    rounded_pts = round((frame_num / framerate) / time_base)
    print(f"Variables:\n\
    frame_num: {frame_num} (type: {type(frame_num)}\n\
    time_base: {time_base} (type: {type(time_base)}\n\
    timestamp: {timestamp} (type: {type(timestamp)}\n\
    frame_num / framerate: {frame_num / framerate}\n\
    frame_num / time_base: {frame_num / time_base}\n\
    (frame_num / framerate) / time_base: {(frame_num / framerate) / time_base}\n\
    rounded_pts = {rounded_pts}\
    ")

    container.seek(rounded_pts, backward=True, stream=container.streams.video[0])
    frame = next(container.decode(video=0))
    frame.to_image().save("TestClips/Thumbnail3.jpg".format(frame.pts), quality=80)
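Reviewer note on the deleted seek test: the script converts a frame number to a pts by dividing by the frame rate and then by the stream's time base. A minimal sketch of that arithmetic follows, using only the standard library. The function name `frame_to_pts` and the example rates (60 fps with a 1/1000 time base, and 30000/1001 fps with a 1/90000 time base) are assumptions for illustration, not values read from the test clip.

```python
from fractions import Fraction


def frame_to_pts(frame_num: int, framerate: Fraction, time_base: Fraction) -> int:
    """Convert a frame index to the nearest pts in units of time_base."""
    seconds = Fraction(frame_num) / framerate  # exact timestamp in seconds
    return round(seconds / time_base)          # nearest integer pts


# Frame 622 at an assumed 60 fps with a millisecond time base.
print(frame_to_pts(622, Fraction(60), Fraction(1, 1000)))

# Frame 30 at an assumed NTSC rate with a 90 kHz time base.
print(frame_to_pts(30, Fraction(30000, 1001), Fraction(1, 90000)))
```

Keeping the values as `Fraction` until the final `round` avoids floating-point drift on non-integer frame rates.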

@ -1,3 +0,0 @@

DOCKER_DATA=/home/joey/docker_data/arr
DOWNLOAD_DIR=/mnt/torrenting/NZB
INCOMPLETE_DOWNLOAD_DIR=/mnt/torrenting/NZB_incomplete

@ -1,82 +0,0 @@

version: "3"
services:
  radarr:
    image: linuxserver/radarr
    container_name: radarr
    networks:
      - web
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=America/Los_Angeles
    volumes:
      - /mnt/nas/Video/Movies:/movies
      - "${DOCKER_DATA}/radarr_config:/config"
      - "${DOWNLOAD_DIR}:/downloads"
    labels:
      - traefik.http.routers.radarr.rule=Host(`radarr.jafner.net`)
      - traefik.http.routers.radarr.tls.certresolver=lets-encrypt
      - traefik.http.services.radarr.loadbalancer.server.port=7878
      - traefik.http.routers.radarr.middlewares=lan-only@file
  sonarr:
    image: linuxserver/sonarr
    container_name: sonarr
    networks:
      - web
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=America/Los_Angeles
    volumes:
      - /mnt/nas/Video/Shows:/shows
      - "${DOCKER_DATA}/sonarr_config:/config"
      - "${DOWNLOAD_DIR}:/downloads"
    labels:
      - traefik.http.routers.sonarr.rule=Host(`sonarr.jafner.net`)
      - traefik.http.routers.sonarr.tls.certresolver=lets-encrypt
      - traefik.http.services.sonarr.loadbalancer.server.port=8989
      - traefik.http.routers.sonarr.middlewares=lan-only@file

  nzbhydra2:
    image: linuxserver/nzbhydra2
    container_name: nzbhydra2
    networks:
      - web
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=America/Los_Angeles
    volumes:
      - "${DOCKER_DATA}/nzbhydra2_config:/config"
      - "${DOWNLOAD_DIR}:/downloads"
    labels:
      - traefik.http.routers.nzbhydra2.rule=Host(`nzbhydra.jafner.net`)
      - traefik.http.routers.nzbhydra2.tls.certresolver=lets-encrypt
      - traefik.http.services.nzbhydra2.loadbalancer.server.port=5076
      - traefik.http.routers.nzbhydra2.middlewares=lan-only@file

  sabnzbd:
    image: linuxserver/sabnzbd
    container_name: sabnzbd
    networks:
      - web
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=America/Los_Angeles
    ports:
      - 8085:8080
    volumes:
      - "${DOCKER_DATA}/sabnzbd_config:/config"
      - "${DOWNLOAD_DIR}:/downloads"
      - "${INCOMPLETE_DOWNLOAD_DIR}:/incomplete-downloads"
    labels:
      - traefik.http.routers.sabnzbd.rule=Host(`sabnzbd.jafner.net`)
      - traefik.http.routers.sabnzbd.tls.certresolver=lets-encrypt
      - traefik.http.services.sabnzbd.loadbalancer.server.port=8080
      - traefik.http.routers.sabnzbd.middlewares=lan-only@file

networks:
  web:
    external: true

@ -1 +0,0 @@

LIBRARY_DIR=/mnt/nas/Ebooks/Calibre

@ -1,40 +0,0 @@

version: '3'
services:
  calibre-web-rpg:
    image: linuxserver/calibre-web
    container_name: calibre-web-rpg
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=America/Los_Angeles
    volumes:
      - calibre-web-rpg_data:/config
      - /mnt/calibre/rpg:/books
    labels:
      - traefik.http.routers.calibre-rpg.rule=Host(`rpg.jafner.net`)
      - traefik.http.routers.calibre-rpg.tls.certresolver=lets-encrypt
    networks:
      - web

  calibre-web-sff:
    image: linuxserver/calibre-web
    container_name: calibre-web-sff
    environment:
      - PUID=1000
      - PGID=1000
      - TZ=America/Los_Angeles
    volumes:
      - calibre-web-sff_data:/config
      - /mnt/calibre/sff:/books
    labels:
      - traefik.http.routers.calibre.rule=Host(`calibre.jafner.net`)
      - traefik.http.routers.calibre.tls.certresolver=lets-encrypt
    networks:
      - web

networks:
  web:
    external: true
volumes:
  calibre-web-rpg_data:
  calibre-web-sff_data:

@ -1,12 +0,0 @@

version: "3"
services:
  cloudflare-ddns:
    image: oznu/cloudflare-ddns
    container_name: cloudflare-ddns
    restart: unless-stopped
    environment:
      - API_KEY=***REMOVED***
      - ZONE=jafner.net
      - SUBDOMAIN=*
    labels:
      - traefik.enable=false

@ -1 +0,0 @@

DRAWIO_BASE_URL=https://draw.jafner.net

@ -1,74 +0,0 @@

version: '3'
services:
  plantuml-server:
    image: jgraph/plantuml-server
    container_name: drawio_plantuml-server
    restart: unless-stopped
    expose:
      - "8080"
    networks:
      - drawionet
    volumes:
      - fonts_volume:/usr/share/fonts/drawio
  image-export:
    image: jgraph/export-server
    container_name: drawio_export-server
    restart: unless-stopped
    expose:
      - "8000"
    networks:
      - drawionet
    volumes:
      - fonts_volume:/usr/share/fonts/drawio
    environment:
      - DRAWIO_SERVER_URL=${DRAWIO_BASE_URL}
  drawio:
    image: jgraph/drawio
    container_name: drawio_drawio
    links:
      - plantuml-server:plantuml-server
      - image-export:image-export
    depends_on:
      - plantuml-server
      - image-export
    networks:
      - drawionet
      - web
    environment:
      - DRAWIO_SELF_CONTAINED=1
      - PLANTUML_URL=http://plantuml-server:8080/
      - EXPORT_URL=http://image-export:8000/
      - DRAWIO_BASE_URL=${DRAWIO_BASE_URL}
      - DRAWIO_CSP_HEADER=${DRAWIO_CSP_HEADER}
      - DRAWIO_VIEWER_URL=${DRAWIO_VIEWER_URL}
      - DRAWIO_CONFIG=${DRAWIO_CONFIG}
      - DRAWIO_GOOGLE_CLIENT_ID=${DRAWIO_GOOGLE_CLIENT_ID}
      - DRAWIO_GOOGLE_APP_ID=${DRAWIO_GOOGLE_APP_ID}
      - DRAWIO_GOOGLE_CLIENT_SECRET=${DRAWIO_GOOGLE_CLIENT_SECRET}
      - DRAWIO_GOOGLE_VIEWER_CLIENT_ID=${DRAWIO_GOOGLE_VIEWER_CLIENT_ID}
      - DRAWIO_GOOGLE_VIEWER_APP_ID=${DRAWIO_GOOGLE_VIEWER_APP_ID}
      - DRAWIO_GOOGLE_VIEWER_CLIENT_SECRET=${DRAWIO_GOOGLE_VIEWER_CLIENT_SECRET}
      - DRAWIO_MSGRAPH_CLIENT_ID=${DRAWIO_MSGRAPH_CLIENT_ID}
      - DRAWIO_MSGRAPH_CLIENT_SECRET=${DRAWIO_MSGRAPH_CLIENT_SECRET}
      - DRAWIO_GITLAB_ID=${DRAWIO_GITLAB_ID}
      - DRAWIO_GITLAB_URL=${DRAWIO_GITLAB_URL}
      - DRAWIO_CLOUD_CONVERT_APIKEY=${DRAWIO_CLOUD_CONVERT_APIKEY}
      - DRAWIO_CACHE_DOMAIN=${DRAWIO_CACHE_DOMAIN}
      - DRAWIO_MEMCACHED_ENDPOINT=${DRAWIO_MEMCACHED_ENDPOINT}
      - DRAWIO_PUSHER_MODE=2
      - DRAWIO_IOT_ENDPOINT=${DRAWIO_IOT_ENDPOINT}
      - DRAWIO_IOT_CERT_PEM=${DRAWIO_IOT_CERT_PEM}
      - DRAWIO_IOT_PRIVATE_KEY=${DRAWIO_IOT_PRIVATE_KEY}
      - DRAWIO_IOT_ROOT_CA=${DRAWIO_IOT_ROOT_CA}
      - DRAWIO_MXPUSHER_ENDPOINT=${DRAWIO_MXPUSHER_ENDPOINT}
    labels:
      - traefik.http.routers.drawio.rule=Host(`draw.jafner.net`)
      - traefik.http.routers.drawio.tls.certresolver=lets-encrypt

networks:
  drawionet:
  web:
    external: true

volumes:
  fonts_volume:

@ -1,5 +0,0 @@

#!/bin/bash
cd /home/joey/docker_config/
git add --all
git commit -am "$(date)"
git push

@ -1,2 +0,0 @@

DOCKER_DATA=/home/joey/docker_data/grafana-stack
MINECRAFT_DIR=/home/joey/docker_data/minecraft

@ -1 +0,0 @@

[]

@ -1,63 +0,0 @@

version: '3'
services:
  influxdb:
    image: influxdb:latest
    container_name: influxdb
    restart: unless-stopped
    networks:
      - monitoring
    ports:
      - 8086:8086
      - 8089:8089/udp
    volumes:
      - ./influxdb.conf:/etc/influxdb/influxdb.conf:ro
      - "${DOCKER_DATA}/influxdb:/var/lib/influxdb"
    environment:
      - TZ=America/Los_Angeles
      - INFLUXDB_HTTP_ENABLED=true
      - INFLUXDB_DB=host
    command: -config /etc/influxdb/influxdb.conf

  telegraf:
    image: telegraf:latest
    container_name: telegraf
    restart: unless-stopped
    depends_on:
      - influxdb
    networks:
      - monitoring
    volumes:
      - ./telegraf.conf:/etc/telegraf/telegraf.conf:ro
      - ./scripts/.forgetps.json:/.forgetps.json:ro
      - /var/run/docker.sock:/var/run/docker.sock:ro
      - /sys:/rootfs/sys:ro
      - /proc:/rootfs/proc:ro
      - /etc:/rootfs/etc:ro

  grafana:
    image: mbarmem/grafana-render:latest
    container_name: grafana
    restart: unless-stopped
    depends_on:
      - influxdb
      - telegraf
    networks:
      - monitoring
      - web
    user: "0"
    volumes:
      - ./grafana:/var/lib/grafana
      - ./grafana.ini:/etc/grafana/grafana.ini
    environment:
      - GF_INSTALL_PLUGINS=grafana-clock-panel,grafana-simple-json-datasource,grafana-worldmap-panel,grafana-piechart-panel
    labels:
      - traefik.http.routers.grafana.rule=Host(`grafana.jafner.net`)
      - traefik.http.routers.grafana.tls.certresolver=lets-encrypt
      #- traefik.http.routers.grafana.middlewares=authelia@file

networks:
  monitoring:
    external: true
  web:
    external: true

@ -1,622 +0,0 @@

##################### Grafana Configuration Example #####################
#
# Everything has defaults so you only need to uncomment things you want to
# change

# possible values : production, development
;app_mode = production

# instance name, defaults to HOSTNAME environment variable value or hostname if HOSTNAME var is empty
;instance_name = ${HOSTNAME}

#################################### Paths ####################################
[paths]
# Path to where grafana can store temp files, sessions, and the sqlite3 db (if that is used)
;data = /var/lib/grafana

# Temporary files in `data` directory older than given duration will be removed
;temp_data_lifetime = 24h

# Directory where grafana can store logs
;logs = /var/log/grafana

# Directory where grafana will automatically scan and look for plugins
;plugins = /var/lib/grafana/plugins

# folder that contains provisioning config files that grafana will apply on startup and while running.
;provisioning = conf/provisioning

#################################### Server ####################################
[server]
# Protocol (http, https, h2, socket)
;protocol = http

# The ip address to bind to, empty will bind to all interfaces
;http_addr =

# The http port to use
;http_port = 3000

# The public facing domain name used to access grafana from a browser
;domain = localhost

# Redirect to correct domain if host header does not match domain
# Prevents DNS rebinding attacks
;enforce_domain = false

# The full public facing url you use in browser, used for redirects and emails
# If you use reverse proxy and sub path specify full url (with sub path)
;root_url = http://localhost:3000

# Serve Grafana from subpath specified in `root_url` setting. By default it is set to `false` for compatibility reasons.
;serve_from_sub_path = false

# Log web requests
;router_logging = false

# the path relative working path
;static_root_path = public

# enable gzip
;enable_gzip = false

# https certs & key file
;cert_file =
;cert_key =

# Unix socket path
;socket =

#################################### Database ####################################
[database]
# You can configure the database connection by specifying type, host, name, user and password
# as separate properties or as one string using the url properties.

# Either "mysql", "postgres" or "sqlite3", it's your choice
;type = sqlite3
;host = 127.0.0.1:3306
;name = grafana
;user = root
# If the password contains # or ; you have to wrap it with triple quotes. Ex """#password;"""
;password =

# Use either URL or the previous fields to configure the database
# Example: mysql://user:secret@host:port/database
;url =

# For "postgres" only, either "disable", "require" or "verify-full"
;ssl_mode = disable

# For "sqlite3" only, path relative to data_path setting
;path = grafana.db

# Max idle conn setting default is 2
;max_idle_conn = 2

# Max conn setting default is 0 (means not set)
;max_open_conn =

# Connection Max Lifetime default is 14400 (means 14400 seconds or 4 hours)
;conn_max_lifetime = 14400

# Set to true to log the sql calls and execution times.
;log_queries =

# For "sqlite3" only. cache mode setting used for connecting to the database. (private, shared)
;cache_mode = private

#################################### Cache server #############################
[remote_cache]
# Either "redis", "memcached" or "database" default is "database"
;type = database

# cache connectionstring options
# database: will use Grafana primary database.
# redis: config like redis server e.g. `addr=127.0.0.1:6379,pool_size=100,db=0,ssl=false`. Only addr is required. ssl may be 'true', 'false', or 'insecure'.
# memcache: 127.0.0.1:11211
;connstr =

#################################### Data proxy ###########################
[dataproxy]

# This enables data proxy logging, default is false
;logging = false

# How long the data proxy should wait before timing out default is 30 (seconds)
;timeout = 30

# If enabled and user is not anonymous, data proxy will add X-Grafana-User header with username into the request, default is false.
;send_user_header = false

#################################### Analytics ####################################
[analytics]
# Server reporting, sends usage counters to stats.grafana.org every 24 hours.
# No ip addresses are being tracked, only simple counters to track
# running instances, dashboard and error counts. It is very helpful to us.
# Change this option to false to disable reporting.
;reporting_enabled = true

# Set to false to disable all checks to https://grafana.net
# for new versions (grafana itself and plugins), check is used
# in some UI views to notify that grafana or plugin update exists
# This option does not cause any auto updates, nor send any information
# only a GET request to http://grafana.com to get latest versions
;check_for_updates = true

# Google Analytics universal tracking code, only enabled if you specify an id here
;google_analytics_ua_id =

# Google Tag Manager ID, only enabled if you specify an id here
;google_tag_manager_id =

#################################### Security ####################################
[security]
# default admin user, created on startup
;admin_user = admin
admin_user = jafner

# default admin password, can be changed before first start of grafana, or in profile settings
;admin_password = admin
admin_password = joeyyeoj

# used for signing
;secret_key = ***REMOVED***

# disable gravatar profile images
;disable_gravatar = false

# data source proxy whitelist (ip_or_domain:port separated by spaces)
;data_source_proxy_whitelist =

# disable protection against brute force login attempts
;disable_brute_force_login_protection = false

# set to true if you host Grafana behind HTTPS. default is false.
;cookie_secure = false

# set cookie SameSite attribute. defaults to `lax`. can be set to "lax", "strict" and "none"
;cookie_samesite = lax

# set to true if you want to allow browsers to render Grafana in a <frame>, <iframe>, <embed> or <object>. default is false.
;allow_embedding = false

# Set to true if you want to enable http strict transport security (HSTS) response header.
# This is only sent when HTTPS is enabled in this configuration.
# HSTS tells browsers that the site should only be accessed using HTTPS.
# The default version will change to true in the next minor release, 6.3.
;strict_transport_security = false

# Sets how long a browser should cache HSTS. Only applied if strict_transport_security is enabled.
;strict_transport_security_max_age_seconds = 86400

# Set to true to enable the HSTS preloading option. Only applied if strict_transport_security is enabled.
;strict_transport_security_preload = false

# Set to true to enable the HSTS includeSubDomains option. Only applied if strict_transport_security is enabled.
;strict_transport_security_subdomains = false

# Set to true to enable the X-Content-Type-Options response header.
# The X-Content-Type-Options response HTTP header is a marker used by the server to indicate that the MIME types advertised
# in the Content-Type headers should not be changed and be followed. The default will change to true in the next minor release, 6.3.
;x_content_type_options = false

# Set to true to enable the X-XSS-Protection header, which tells browsers to stop pages from loading
# when they detect reflected cross-site scripting (XSS) attacks. The default will change to true in the next minor release, 6.3.
;x_xss_protection = false

#################################### Snapshots ###########################
[snapshots]
# snapshot sharing options
;external_enabled = true
;external_snapshot_url = https://snapshots-origin.raintank.io
;external_snapshot_name = Publish to snapshot.raintank.io

# Set to true to enable this Grafana instance act as an external snapshot server and allow unauthenticated requests for